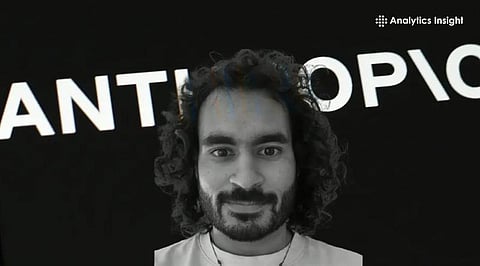

Mrinank Sharma, the AI safety researcher who led Anthropic’s safeguards research team, has resigned from the company. His team focused on reducing risks from advanced artificial intelligence systems. Sharma’s departure became public after he shared a detailed resignation letter last week.

In his exit note, Sharma wrote that ‘the world is in peril.’ He stressed that the danger does not come from AI alone. Instead, he pointed to a mix of global crises, including rapid technological progress, weak governance, and social instability. According to Sharma, humanity is building powerful tools faster than it can learn to use them wisely.

Sharma said he struggled to reconcile his personal values with the pace and direction of AI development. He suggested that careful safety work often takes more time than commercial pressures allow.

His remarks drew criticism toward Anthropic’s executives, even though he did not directly criticize the company’s leadership. Some researchers worry that rising competitive pressure could lead AI companies to weaken their commitment to safety.

Anthropic’s mission to develop safer artificial intelligence systems faces practical challenges as Sharma leaves the company. His exit raises questions about how well the company can keep developing new AI technologies while meeting its ethical obligations.

His departure comes at a critical moment for artificial intelligence, as governments and regulators work to establish rules for the technology. Sharma’s intervention adds urgency for others to join these ongoing discussions. His warning reflects growing unease among experts who fear that society is not prepared for the impact of advanced AI.

Sharma indicated he plans to step away from corporate AI work for now. He wants to focus on writing and to reflect more deeply on technology and ethics. His departure adds to a widening debate on whether current AI development paths are sustainable.