For years, progress in robotics has followed a familiar pattern. Researchers train increasingly powerful vision-language-action models in controlled environments, deploy them into the real world, and hope those models generalize well enough to handle whatever comes next. While this approach has produced capable robots, it has also revealed a fundamental limitation: once deployed, most robots stop learning unless someone manually updates them.

That limitation is becoming harder to ignore as robots move out of labs and into factories, homes, and public spaces. Real environments are messy, unpredictable, and constantly changing. A robot trained only on historical data will inevitably encounter situations it was never designed for. This is where AGIBOT’s Scalable Online Post-training (SOP) comes in.

AGIBOT’s SOP changes how robots refine their skills over time. Instead of treating deployment as the finish line, SOP treats it as the starting point for continuous learning.

Traditional robot training happens in two phases. First comes large-scale pre-training, where models learn general perception and reasoning from massive datasets. Then comes offline post-training, where robots are fine-tuned for specific tasks using carefully curated data or human demonstrations.

While effective in controlled settings, this approach struggles in the real world. Offline training can’t keep up with new environments, and task-specific fine-tuning often narrows a robot’s capabilities instead of expanding them. The result is robots that work well in theory but degrade when conditions change.

AGIBOT’s SOP takes a different approach. It enables robots to continue learning while they operate, using real-world experience as a constant source of training data.

At its core, AGIBOT’s SOP is built around three ideas: online learning, fleet-level coordination, and multi-task improvement.

First, robots learn online. Instead of waiting for engineers to collect data and retrain models offline, SOP allows robots to update their policies as they act in the real world. This dramatically reduces the gap between training conditions and actual deployment.

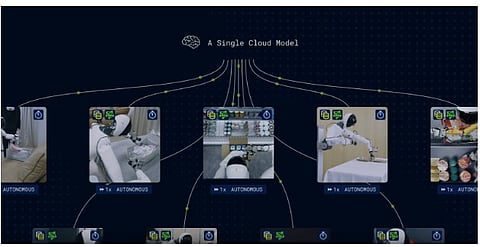

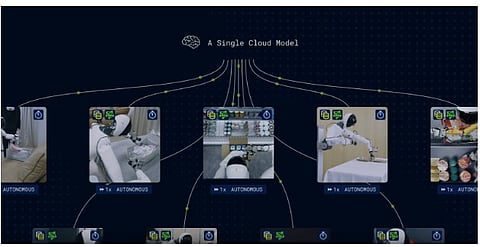

Second, learning happens across a fleet. Individual robots don’t improve in isolation. Data from multiple robots is aggregated in the cloud, where models are updated using powerful centralized computing resources. Those improvements are then shared back across the entire fleet. In effect, one robot’s experience becomes everyone’s experience.

Third, SOP supports learning across many tasks at once. This is critical for general-purpose robots. Improving performance on one task shouldn’t come at the expense of overall flexibility. SOP’s design helps preserve general intelligence while still driving task-specific gains.

Together, these components create a feedback loop: robots act, data flows to the cloud, models improve, and updates return to the robots, often within minutes.
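To make that loop concrete, here is a minimal Python sketch of the act, aggregate, update, and broadcast cycle. It is a toy illustration, not AGIBOT’s implementation: the names `Robot`, `CloudLearner`, `Policy`, and `run_fleet` are hypothetical, each task’s "policy" is reduced to a single number, and the averaging update rule is an assumption chosen only to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Toy policy: one scalar parameter per task (a stand-in for model weights)."""
    version: int = 0
    params: dict = field(default_factory=dict)


@dataclass
class Experience:
    robot_id: int
    task: str
    error: float  # gap between what the robot needed and what its policy gave


class Robot:
    """Hypothetical robot that works on one task in its own environment."""

    def __init__(self, robot_id, task, target):
        self.robot_id = robot_id
        self.task = task
        self.target = target  # assumed ideal parameter for this environment
        self.policy = Policy()

    def act(self):
        # Act with the current policy and report how far off it was.
        current = self.policy.params.get(self.task, 0.0)
        return Experience(self.robot_id, self.task, self.target - current)

    def receive(self, policy):
        # Install a copy of the latest fleet-wide policy.
        self.policy = Policy(policy.version, dict(policy.params))


class CloudLearner:
    """Hypothetical central learner that pools experience from the fleet."""

    def __init__(self, tasks, learning_rate=0.5):
        self.policy = Policy(params={task: 0.0 for task in tasks})
        self.learning_rate = learning_rate

    def update(self, batch):
        # Group reported errors by task so every task is updated together.
        errors_by_task = {}
        for exp in batch:
            errors_by_task.setdefault(exp.task, []).append(exp.error)
        new_params = dict(self.policy.params)
        for task, errors in errors_by_task.items():
            mean_error = sum(errors) / len(errors)
            new_params[task] += self.learning_rate * mean_error
        self.policy = Policy(self.policy.version + 1, new_params)
        return self.policy


def run_fleet(robots, learner, rounds):
    """Run the loop: robots act, the cloud updates, the policy is broadcast."""
    for robot in robots:
        robot.receive(learner.policy)
    for _ in range(rounds):
        batch = [robot.act() for robot in robots]
        policy = learner.update(batch)
        for robot in robots:
            robot.receive(policy)
    return learner.policy


if __name__ == "__main__":
    fleet = [
        Robot(0, "assembly", target=1.0),
        Robot(1, "assembly", target=3.0),
        Robot(2, "cloth", target=4.0),
    ]
    final = run_fleet(fleet, CloudLearner(["assembly", "cloth"]), rounds=10)
    print(final.version, final.params)
```

In this sketch, the two "assembly" robots see different conditions, and the shared parameter settles between them because their experience is pooled before each update. The "cloth" parameter improves in the same rounds, which mirrors the multi-task idea in a very simplified form.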

In practical experiments, SOP has shown clear benefits. Robots using the framework demonstrated rapid improvements in complex manipulation tasks such as object assembly and cloth handling. With multiple robots learning in parallel, performance scaled efficiently as more robots were added to the system.

Perhaps more importantly, SOP enabled robots to operate autonomously for extended periods without constant human supervision. That kind of stability is essential if robots are to be trusted in real-world environments where downtime and manual intervention are costly.

SOP reflects a broader trend in artificial intelligence: systems are shifting from static models toward adaptive, continuously improving platforms. Just as modern software updates itself over time, robots are beginning to evolve after deployment.

This has major implications. Robots could adapt to new workplaces without extensive reprogramming. Fleets could learn faster than any single robot ever could. And real-world experience, long considered too messy to use effectively, could become one of the most valuable training resources available.

More broadly, SOP challenges the idea that intelligence must be “finished” before a system goes live. Instead, it suggests a future where robots grow smarter through use, not despite it.

As general-purpose robots move closer to everyday reality, frameworks like SOP may prove essential, not just for improving performance but for ensuring robots remain relevant, resilient, and reliable in a world that never stands still.