This test of free AI detectors assesses their accuracy, false positives, and newsroom usability.

AI detectors show wide accuracy gaps, with some tools repeatedly flagging human content as machine-written.

Experts advise using multiple detectors alongside editorial judgement, rather than trusting automated results alone.

Artificial intelligence detection has quietly become a gatekeeping tool. Universities use it to flag AI-written assignments, while editors rely on it to screen submissions. Meanwhile, companies deploy it to enforce content policies. However, most users still don’t know how reliable these tools actually are.

I tested seven widely used free AI detectors to understand how they perform in real-world conditions. I ran each tool on three types of content: human-written journalism, raw AI-generated text, and AI content edited for tone and structure.

Here are the AI checkers I tested:

QuillBot's AI detector is part of its wider writing suite. It successfully detected AI-dominated content but handled opinion articles and narrative writing poorly. The interface is quick and user-friendly, but the tool offers little explanation of its results, which limits its usefulness in formal assessments. It works best for individual writers who want to check their work before submitting it.

ZeroGPT is easy to use and returns results immediately. It identified obvious AI-generated content but misclassified several structured explainers and list-based pieces. Its binary verdict comes with little explanation, which increases the likelihood that users will misread the outcome. The tool suits rapid checks but lacks the detail needed for academic research and news reporting.

Scribbr delivered the most balanced results across all test cases. Human-written articles passed cleanly, while AI-heavy sections were flagged with probability-based scores. Scribbr avoided sweeping judgments and did not label entire documents as AI-generated due to isolated patterns. The free version lacks granular sentence-level detail; however, its conservative approach makes it suitable for academic and editorial settings.

GPTZero performed well when analysing raw, unedited AI text. However, accuracy declined once AI content was rewritten or structurally refined, and in several cases clean, human-written explainers were misclassified as possibly AI-generated. Such errors are particularly problematic in journalism and academia, where a wrongful flag can have serious consequences. GPTZero is best used as an initial screener, not a verification tool.

Content at Scale performed well on long-form, SEO-oriented articles. It identified AI patterns across entire documents rather than isolated sentences. However, its accuracy dropped in short news reports and opinion writing. The tool appears to be designed for marketers auditing bulk content rather than for journalists assessing individual stories.

Writer.com adopted a notably cautious approach. Instead of definitive labels, it issued probability-based warnings, especially for hybrid human-AI text. This reduces false positives and makes the tool suitable for organisations where reputational or legal risk is a concern. The feedback may feel limited for individual users; however, the restraint is deliberate.

Copyleaks showed the most advanced technical capabilities of the tools tested. It identified traces of AI generation, particularly in paraphrased content, and its detection operated at a near-forensic level of detail, which makes it suitable for institutional use. However, the platform restricts free usage, which limits access for non-paying users.

“I do not oppose AI detectors, but their limitations remain hard to ignore,” one journalist said, pointing to frequent misclassification of human-written content. Another was more direct: “AI detectors work on guesswork.” Several editors questioned whether a machine’s pattern-based ‘instinct’ can verify authenticity.

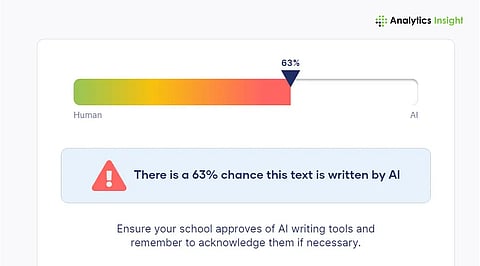

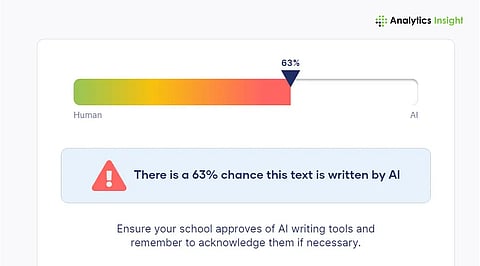

Others highlighted inconsistencies, where the same article scores 0% AI on one tool and 70% on another. A viral example of ‘Twinkle Twinkle Little Star’ being flagged as AI has further fuelled scepticism.

The testing highlights a critical point: AI detectors are indicators, not arbiters. They analyse linguistic patterns, rather than authorship or intent. Polished human writing can be flagged, while edited AI content can pass undetected. In education and journalism, overreliance on a single tool can therefore lead to unjust outcomes.

A safer approach is comparative use. Running content through multiple detectors, examining their explanations, and applying human editorial judgement all remain essential. As AI-generated text becomes harder to distinguish from human writing, detection software can assist decision-making but cannot replace it.
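For readers who want to make the comparative approach concrete, it can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's method: the detector names and scores are entered by hand (the 0% and 70% figures echo the disagreement mentioned earlier, and the third value is hypothetical), and the `flag_threshold` and `disagreement_threshold` values are arbitrary choices for demonstration.

```python
from statistics import median


def summarise_scores(scores, flag_threshold=50.0, disagreement_threshold=30.0):
    """Combine AI-likelihood scores (0-100) from several detectors.

    Both thresholds are illustrative assumptions, not values
    published by any detection tool.
    """
    if len(scores) < 2:
        raise ValueError("comparative use needs at least two detectors")

    values = list(scores.values())
    spread = max(values) - min(values)  # how far apart the tools are
    mid = median(values)                # less sensitive to one outlier

    if spread >= disagreement_threshold:
        verdict = "detectors disagree: needs human review"
    elif mid >= flag_threshold:
        verdict = "consistently flagged: needs human review"
    else:
        verdict = "no consistent signal"

    return {"median": mid, "spread": spread, "verdict": verdict}


# Hypothetical, hand-entered scores for a single article.
example = {"detector_a": 0.0, "detector_b": 70.0, "detector_c": 20.0}
result = summarise_scores(example)
print(result)
```

Note that neither outcome that raises a concern ends in an automatic decision: both hand the text to a human editor, which is the point of comparative use.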

1. Are Free AI Detectors Accurate Enough for Academic or Newsroom Decisions?

Free AI detectors provide probability-based signals, not proof. They can assist with screening; however, they should never be the sole basis for disciplinary, editorial, or legal decisions.
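A short worked example shows why a flag is a signal rather than proof. The sketch below uses entirely hypothetical numbers (1,000 submissions, 10% of them AI-written, a detector that catches 90% of AI text and wrongly flags 5% of human text); none of these figures come from the tools tested, and the function name is invented for illustration.

```python
def share_of_flags_that_are_human(total, ai_share, detection_rate, false_positive_rate):
    """Return the fraction of flagged texts that were actually human-written."""
    ai_texts = total * ai_share
    human_texts = total - ai_texts
    true_flags = ai_texts * detection_rate          # AI texts correctly flagged
    false_flags = human_texts * false_positive_rate  # human texts wrongly flagged
    flagged = true_flags + false_flags
    if flagged == 0:
        return 0.0  # nothing was flagged, so nothing was wrongly flagged
    return false_flags / flagged


# All four inputs are hypothetical assumptions, chosen only for illustration.
wrongly_flagged = share_of_flags_that_are_human(
    total=1000, ai_share=0.10, detection_rate=0.90, false_positive_rate=0.05
)
print(f"{wrongly_flagged:.0%} of flagged texts were written by humans")
```

Under these assumed rates, about one in three flagged texts would be human-written, even though the detector looks accurate on paper.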

2. Why Do AI Detectors Sometimes Flag Human-Written Content as AI?

Detectors analyse patterns like structure and predictability. Highly polished, formal, or formulaic human writing can resemble AI output and trigger false positives.

3. Can AI Detectors Identify Edited or Paraphrased AI Content?

Some tools can detect patterns in lightly edited AI text. Heavily rewritten or hybrid content often bypasses detection, limiting reliability.

4. Is It Better to Use More Than One AI Detection Tool?

Yes. Using two or more detectors reduces reliance on a single algorithm and helps identify inconsistent or misleading results.

5. Do AI Detectors Check Plagiarism as Well?

No. AI detectors estimate whether the writing style looks machine-generated, whereas plagiarism checkers compare text against existing sources. The two serve different purposes and should not be confused.