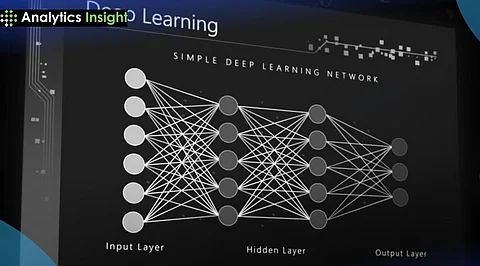

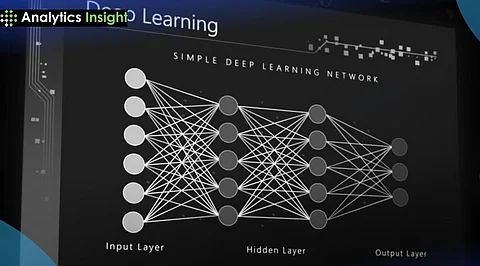

Deep learning is a subset of machine learning that uses artificial neural networks with many layers to model complex patterns in large datasets. Loosely inspired by how the brain processes information, these networks pass data through multiple layers of interconnected nodes. They learn useful representations directly from examples rather than from hand-written rules. This lets machines perform tasks such as image recognition, natural language processing, and autonomous driving by interpreting data at increasing levels of abstraction.

Description: Multilayer perceptrons (MLPs) are the simplest form of deep neural network. They consist of multiple fully connected layers of nodes, or "neurons." Each hidden layer applies a non-linear activation function, so MLPs can learn both linear and non-linear relationships in data.

Applications: Used for classification, regression, and feature learning tasks.
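XOR is the classic relationship that no single linear layer can represent. The plain-Python sketch below wires a two-layer MLP so that its ReLU hidden layer can compute XOR. The weights are hypothetical, hand-picked values and were not learned. A real MLP would learn them from data and would usually be built with a framework rather than written from scratch.

```python
# Hypothetical hand-set weights (not learned), chosen so the network computes XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]  # one row per hidden neuron
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [1.0, -2.0]
OUTPUT_BIAS = 0.0


def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def mlp(x):
    hidden = dense(x, HIDDEN_WEIGHTS, HIDDEN_BIASES, activation=relu)  # non-linear hidden layer
    (output,) = dense(hidden, [OUTPUT_WEIGHTS], [OUTPUT_BIAS])         # linear output layer
    return output


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mlp(x))  # outputs 0.0, 1.0, 1.0, 0.0 respectively
```

If you remove the ReLU, the two layers collapse into a single linear function, and that function cannot produce this output pattern.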

Description: Convolutional neural networks (CNNs) are designed to process data with a grid-like topology, such as images. Convolutional layers extract local features, and pooling layers downsample the resulting feature maps.

Applications: Primarily used for image classification, object detection, and image segmentation tasks.
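The two core CNN operations can be sketched in plain Python as a "valid" convolution followed by 2×2 max pooling. Like most deep learning libraries, the sketch implements convolution as cross-correlation, meaning the kernel is not flipped. The edge-detecting kernel and the toy image are hypothetical. A trained CNN would learn many kernels from data instead of using a hand-picked one.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation): slide the kernel and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


image = [[0, 0, 1, 1, 1] for _ in range(5)]  # toy image: dark left, bright right
vertical_edge = [[-1, 1], [-1, 1]]           # hypothetical hand-picked kernel
fmap = conv2d(image, vertical_edge)          # every row is [0, 2, 0, 0]: the edge lights up
pooled = max_pool2d(fmap)                    # [[2, 0], [2, 0]]
```

Pooling keeps the edge response while halving each spatial dimension. This downsampling is what lets deeper layers cover larger regions of the image.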

Description: Recurrent neural networks (RNNs) are suited to sequential data, such as time series or natural language. They maintain a hidden state that is updated at every step and carries information from previous inputs.

Applications: Commonly used in speech recognition, language modeling, and time-series forecasting.
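The hidden-state update can be sketched with a scalar Elman-style RNN, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). Real RNNs use vectors and weight matrices. Scalars and hypothetical weight values are used here only to keep the recurrence readable.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). Returns the hidden state at every step."""
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the current input with the previous state
        states.append(h)
    return states


# Same later inputs but a different first input: the final state still "remembers" the difference.
states_a = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.9, b=0.0)
states_b = rnn_forward([-1.0, 0.0, 0.0], w_x=1.0, w_h=0.9, b=0.0)
print(states_a[-1], states_b[-1])
```

Each pass through tanh scales the remembered signal, so the memory fades over many steps. LSTMs address this weakness.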

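Description: Long short-term memory networks (LSTMs) are a type of RNN that use memory cells and gates (input, forget, and output) to capture long-term dependencies in data. This design mitigates the vanishing-gradient problem of plain RNNs.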

Applications: Effective in tasks requiring memory of past inputs, such as speech recognition and machine translation.
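A single LSTM step can be sketched with scalar gates. The per-gate parameter layout `(w_x, w_h, bias)` is an illustrative convention, not a library API, and the parameter values are hypothetical. They are chosen so that the cell stores an input once and then holds it.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. `p` maps each gate name to (w_x, w_h, bias)."""
    def pre(gate):
        w_x, w_h, bias = p[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value to write
    c = f * c_prev + i * g           # additive update lets information persist across steps
    h = o * math.tanh(c)
    return h, c


params = {  # hypothetical values: forget gate mostly open, input gate opens only when x is large
    "forget": (0.0, 0.0, 5.0),
    "input": (10.0, 0.0, -5.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:  # write once, then four steps of "nothing"
    h, c = lstm_step(x, h, c, params)
print(c)  # the cell state stays close to its value after the first step
```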

Description: Generative adversarial networks (GANs) consist of two neural networks that compete with each other in a game-theoretic framework. The generator creates new data, while the discriminator tries to tell generated samples apart from real ones.

Applications: Used for generating realistic images, videos, and other forms of data.
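The adversarial game can be made concrete through the two losses. The discriminator D minimizes binary cross-entropy on real and generated samples. The generator G is shown with the common "non-saturating" loss, −log D(G(z)). The inputs below are hypothetical discriminator probabilities for a single real and a single fake sample. In training, these losses are averaged over a batch and minimized alternately.

```python
import math


def discriminator_loss(d_real, d_fake):
    """BCE for D: push D(x) toward 1 on real data and D(G(z)) toward 0 on generated data."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D mistakes the generated sample for real."""
    return -math.log(d_fake)


# Discriminator winning: real scored 0.9, fake scored 0.1.
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))  # D loss low, G loss high
# Generator winning: D is fooled into scoring the fake 0.9.
print(discriminator_loss(0.9, 0.9), generator_loss(0.9))  # D loss high, G loss low
```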

Description: Deep belief networks (DBNs) are generative models composed of multiple layers of stochastic latent variables. They are typically pretrained greedily, one layer at a time, with each layer trained as a Restricted Boltzmann Machine (RBM).

Applications: Useful for feature extraction and dimensionality reduction.
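The building block of a DBN is a binary RBM, defined by an energy function E(v, h) = −Σᵢ aᵢvᵢ − Σⱼ bⱼhⱼ − Σᵢⱼ vᵢWᵢⱼhⱼ. Its hidden units are conditionally independent given the visible units. The sketch shows only these two definitions with hypothetical small parameters. Actual layer-wise training, for example with contrastive divergence, is not shown.

```python
import math


def rbm_energy(v, h, a, b, W):
    """E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j (lower energy = more probable)."""
    visible_term = sum(ai * vi for ai, vi in zip(a, v))
    hidden_term = sum(bj * hj for bj, hj in zip(b, h))
    interaction = sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + interaction)


def hidden_probs(v, b, W):
    """p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i W_ij); these probabilities act as learned features."""
    return [
        1.0 / (1.0 + math.exp(-(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))))
        for j in range(len(b))
    ]


# Hypothetical parameters: 3 visible units, 2 hidden units.
a = [0.1, 0.2, 0.3]
b = [0.0, -0.5]
W = [[0.5, -0.2], [0.1, 0.4], [0.3, 0.2]]
v = [1, 0, 1]
print(rbm_energy(v, [1, 0], a, b, W), hidden_probs(v, b, W))
```

In a DBN, the hidden probabilities of one trained RBM serve as the visible input to the next RBM in the stack.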

Description: Transformers are primarily used for natural language processing tasks. They rely on self-attention mechanisms to weigh the importance of different input elements relative to each other.

Applications: Widely used in language translation, text summarization, and chatbots.
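Self-attention computes softmax(QKᵀ/√d_k)·V. Each token's output is a weighted average of all tokens' value vectors, and the weights come from query-key similarity. The single-head, unmasked sketch below uses identity matrices as hypothetical stand-ins for the learned projections W_q, W_k, and W_v. Real Transformers use several heads and learned weights.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention. Returns (outputs, attention weights)."""
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(K[0])
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V), weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 toy tokens with 2 features each
identity = [[1.0, 0.0], [0.0, 1.0]]       # hypothetical stand-in for learned projections
out, attn = self_attention(X, identity, identity, identity)
```

Each row of `attn` sums to 1 and shows how strongly one token attends to every other token.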

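Description: Sequence-to-sequence (Seq2Seq) models use an encoder-decoder architecture. The encoder processes the input sequence, and the decoder generates the output sequence from the encoder's representation. They are typically built from RNNs or Transformers.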

Applications: Commonly used in machine translation and text summarization.

Analysis of Large Datasets: Deep learning algorithms can efficiently process and analyze large datasets, which is essential for extracting insights from the vast amounts of data generated today.

Improved Accuracy: Given enough training data, deep learning models can achieve high accuracy in tasks such as image recognition, speech recognition, and natural language processing, often surpassing traditional machine learning techniques.

Automation and Efficiency: By automating tasks like data analysis and pattern recognition, deep learning can significantly enhance operational efficiency and reduce manual labor.

Cost Efficiency: Deep learning can help businesses save resources by automating complex tasks and improving predictive analytics, which aids in decision-making and risk management.

Innovation Across Industries: Deep learning has transformed industries such as healthcare, finance, and automotive by enabling applications like disease diagnosis, fraud detection, and self-driving cars.

Computer Vision: Used for image recognition, object detection, and facial recognition, which are crucial in surveillance, robotics, and self-driving vehicles.

Speech Recognition: Enables voice assistants like Siri and Alexa to understand and respond to voice commands.

Natural Language Processing (NLP): Powers chatbots, language translation, and text summarization tools.

Recommendation Systems: Used in e-commerce and streaming services to personalize user experiences.

Healthcare: Assists in medical imaging analysis and disease diagnosis.

Fraud Detection: Helps identify and prevent fraudulent activities in finance and e-commerce.

Medical Image Analysis: Deep learning algorithms analyze medical images like X-rays and MRIs to detect anomalies, improving diagnostic accuracy and reducing human error.

Predictive Analytics: Predicts disease outbreaks and patient deterioration, enabling early interventions.

Drug Discovery: Accelerates the drug discovery process by predicting molecular behavior and identifying potential drug candidates.

Fraud Detection: Analyzes transaction patterns to identify fraudulent activities, reducing financial losses.

Algorithmic Trading: Analyzes market trends to decide when to execute trades, with the aim of improving profitability.

Credit Scoring: Improves credit scoring accuracy by assessing a broader range of data points.

Personalized Recommendations: Analyzes customer behavior to provide personalized product recommendations, increasing sales and customer satisfaction.

Inventory Management: Predicts demand to optimize inventory levels, minimizing stockouts and overstock situations.

Visual Search: Enables customers to search for products using images.

Object Detection: Uses computer vision to detect objects on the road so that autonomous vehicles can respond to them, improving safety and navigation.

Chatbots and Virtual Assistants: Enables chatbots and virtual assistants to understand and respond to text and voice commands.

Language Translation: Enables real-time language translation, facilitating global communication.

Manufacturing

Predictive Maintenance: Analyzes sensor data to predict equipment failures, reducing downtime and improving efficiency.

Quality Control: Inspects products for defects using computer vision, ensuring high-quality output.

Crop Monitoring: Analyzes satellite and drone data to detect changes in crop health and predict yields.

Precision Farming: Optimizes crop yield by analyzing variables like soil moisture and temperature.

Content Personalization: Recommends content based on user preferences, enhancing user experience.

AI-Generated Content: Generates music, videos, and stories.

Threat Detection: Identifies potential security threats by analyzing network traffic patterns.

Smart Grids: Analyzes energy consumption patterns to improve grid management and reduce outages.

Renewable Energy Forecasting: Predicts energy generation from renewable sources to manage supply effectively.

Deep learning is used in various applications such as image recognition, natural language processing, speech recognition, and predictive analytics. It is also applied in industries like healthcare for medical diagnosis and in finance for fraud detection.

Neural networks are the core of deep learning, consisting of layers of interconnected nodes (neurons) that process inputs to produce outputs. They are trained on data to learn patterns and make predictions.

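Backpropagation is the algorithm used to compute how much each weight in a neural network contributes to the error between predicted and actual outputs. It applies the chain rule to propagate error gradients backward through the network, layer by layer. An optimizer such as gradient descent then uses those gradients to adjust the weights and reduce the error.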
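The smallest network where backpropagation is more than a single derivative has one hidden unit. The sketch below uses a hypothetical 1-1-1 network with a tanh hidden unit and squared-error loss. It computes gradients output-to-input with the chain rule, checks them against finite differences, and takes one gradient-descent step. The starting weights, the training example, and the learning rate are all hypothetical.

```python
import math


def forward(params, x):
    """Tiny network: z = w1*x + b1, h = tanh(z), y_hat = w2*h + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2  # squared error


def backprop(params, x, y):
    """Gradients w.r.t. (w1, b1, w2, b2), computed from the output back to the input."""
    _, _, w2, _ = params
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y          # dL/dy_hat
    d_w2 = d_yhat * h           # dL/dw2
    d_b2 = d_yhat               # dL/db2
    d_h = d_yhat * w2           # error propagated back to the hidden unit
    d_z = d_h * (1.0 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
    return [d_z * x, d_z, d_w2, d_b2]


def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for i in range(len(params)):
        plus, minus = list(params), list(params)
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads


def gradient_descent_step(params, grads, lr=0.1):
    return [p - lr * g for p, g in zip(params, grads)]


params = [0.5, -0.1, 0.8, 0.2]  # hypothetical starting weights (w1, b1, w2, b2)
x, y = 1.5, 1.0                 # one hypothetical training example
grads = backprop(params, x, y)
new_params = gradient_descent_step(params, grads)
print(loss(params, x, y), loss(new_params, x, y))  # loss drops after one step
```

Deep learning frameworks automate exactly this backward pass (automatic differentiation) for networks with millions of weights.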

Activation functions introduce non-linearity into neural networks, allowing them to learn complex relationships. Common activation functions include ReLU, Sigmoid, and Tanh.
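The three activation functions can be written in a few lines. The sketch also shows why they matter: two stacked linear layers without an activation collapse into one linear layer, while inserting a ReLU breaks that collapse. The layer weights are hypothetical scalars chosen for easy arithmetic.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))  # squashes to (0, 1)


def tanh(z):
    return math.tanh(z)                 # squashes to (-1, 1), zero-centered


def linear(w, b):
    """A scalar linear layer with no activation."""
    return lambda x: w * x + b


layer1 = linear(2.0, 1.0)
layer2 = linear(-3.0, 0.5)


def stacked(x):
    return layer2(layer1(x))


collapsed = linear(-6.0, -2.5)  # -3 * (2x + 1) + 0.5 = -6x - 2.5: same function as `stacked`


def stacked_with_relu(x):
    return layer2(relu(layer1(x)))  # the non-linearity means this is no longer one linear map
```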

Performance is evaluated on held-out test data. Classification tasks use metrics such as accuracy, precision, recall, and F1-score, and regression tasks use root mean squared error (RMSE). Predictions are scored against ground-truth labels, and the results are often compared with those of baseline models.
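The metrics named above can be sketched from their definitions for binary labels. The labels and predictions below are made-up values for illustration. In practice a library implementation, such as scikit-learn's `sklearn.metrics`, is the usual choice.

```python
import math


def confusion_counts(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large regression errors more than small ones."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


labels = [1, 0, 1, 1, 0, 1]  # made-up ground truth
preds = [1, 0, 0, 1, 1, 1]   # made-up predictions
print(classification_metrics(labels, preds))  # accuracy 4/6, precision 0.75, recall 0.75, F1 0.75
print(rmse([3.0, 5.0, 2.0], [2.5, 5.0, 3.0]))  # about 0.645
```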

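Overfitting occurs when a model performs well on the training set but poorly on new, unseen data, typically because it has memorized noise in the training data rather than general patterns. Techniques to prevent overfitting include regularization, dropout, and early stopping.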
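Two of these techniques can be sketched in plain Python. The first is "inverted" dropout, the common variant that rescales surviving activations during training so that nothing changes at inference time. The second is patience-based early stopping over a validation-loss curve. The curve and the `patience` value below are made up. A real training loop would compute validation loss after each epoch and keep the weights from the best epoch.

```python
import math
import random


def inverted_dropout(activations, p, rng):
    """Training-time dropout: zero each unit with probability p and scale survivors by 1/(1-p),
    so the expected activation is unchanged. At inference time, dropout is simply skipped."""
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch): stop once validation loss has failed to improve
    by more than min_delta for `patience` consecutive epochs."""
    best_loss, best_epoch, stale_epochs = math.inf, -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, stale_epochs = loss, epoch, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


val_losses = [0.9, 0.7, 0.6, 0.55, 0.58, 0.6, 0.62, 0.65]  # made-up curve that starts to overfit
print(early_stopping(val_losses, patience=3))  # (3, 6): best at epoch 3, stop at epoch 6
print(inverted_dropout([1.0, 1.0, 1.0, 1.0], p=0.5, rng=random.Random(0)))
```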

Transfer learning involves using a pre-trained model as a starting point for a new task. It is beneficial when there is limited data available for the new task, as it leverages knowledge learned from a related task.
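The core mechanics of transfer learning are to freeze the reused layers and train only a new output "head". The sketch below is a toy version. The "pre-trained" layer is a hypothetical hand-set ReLU layer standing in for weights copied from a model trained on a related task, and it is never updated. A logistic-regression head is trained by full-batch gradient descent on a four-example toy task. With a real framework you would instead load pre-trained weights and exclude them from the optimizer.

```python
import math

# Hypothetical "pre-trained" layer: stands in for weights copied from a source model. Frozen.
FROZEN_WEIGHTS = ((1.0, 1.0), (1.0, 1.0))
FROZEN_BIASES = (0.0, -1.0)


def extract_features(x):
    """Frozen feature extractor (one ReLU layer) reused from the source task."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(FROZEN_WEIGHTS, FROZEN_BIASES)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, lr=0.5, epochs=3000):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [(extract_features(x), y) for x, y in data]  # computed once: the extractor never changes
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for f, y in feats:
            err = sigmoid(w[0] * f[0] + w[1] * f[1] + b) - y  # d(cross-entropy)/d(logit)
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        n = len(feats)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    f = extract_features(x)
    return int(sigmoid(w[0] * f[0] + w[1] * f[1] + b) > 0.5)


toy_task = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]  # hypothetical target task
w, b = train_head(toy_task)
print([predict(w, b, x) for x, _ in toy_task])  # [0, 1, 1, 0]
```

The target labels are not linearly separable in the raw inputs but are separable in the frozen features. This is why a simple head suffices once useful features have been transferred.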

Challenges include the need for large datasets, high computational requirements, and potential issues like overfitting and vanishing gradients. Additionally, deep learning models can be difficult to interpret and require significant expertise to implement effectively.
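The vanishing-gradient problem follows from the chain rule. In a chain of sigmoid layers, the gradient reaching the input is a product of per-layer factors w·σ′(z). Because σ′(z) is at most 0.25, the product shrinks geometrically with depth when |w| ≤ 1. The sketch assumes a hypothetical chain of scalar layers that all share one weight and bias.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(x, depth, weight=1.0, bias=0.0):
    """Forward through `depth` scalar layers a = sigmoid(weight * a + bias). By the chain rule,
    d(output)/d(x) is the product of each layer's local derivative weight * sigmoid'(z)."""
    a, grad = x, 1.0
    for _ in range(depth):
        a = sigmoid(weight * a + bias)
        grad *= weight * a * (1.0 - a)  # sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)) <= 0.25
    return grad


for depth in (2, 5, 10, 20):
    print(depth, input_gradient(0.5, depth))  # shrinks rapidly as depth grows
```

ReLU activations, careful weight initialization, residual connections, and the gated cells of LSTMs are common ways to keep these products from vanishing.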

Deep learning excels at handling complex, high-dimensional data such as images, audio, and text, and it can extract features automatically. Traditional machine learning often requires manual feature engineering, but it can be a better fit for simpler tasks and for smaller, structured datasets.