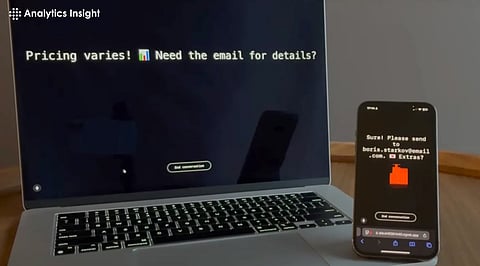

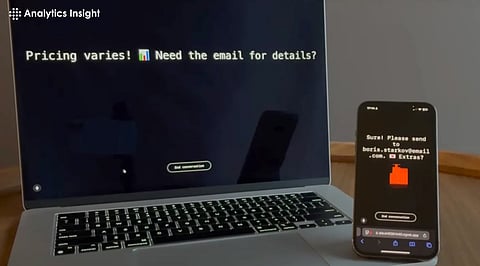

Imagine two AI assistants communicating in a way that humans can’t follow. Unsettling, right? A recent demo video shows something close to it: two AI agents are talking to each other when, midway through, one of them suggests switching to Gibberlink for a more efficient conversation. Once the other agrees, they switch to a series of sounds that human listeners cannot understand.

Gibberlink is a protocol that lets AI systems converse in a machine-optimized format instead of spoken language, one that humans can’t understand by ear. That is efficient for AI-to-AI work, but it raises a glaring security concern for humans.

Gibberlink was developed by Anton Pidkuiko and Boris Starkov to make communication between AI agents more efficient. They built it during the ElevenLabs and a16z global hackathon, and it lets AI systems switch from human language to a custom machine-to-machine protocol.

The switch reportedly makes the exchange about 80% more efficient. It relies on the ggwave library, which transmits data as sound for machine-to-machine communication. Whenever two AI agents recognize each other during a conversation, they can activate Gibberlink Mode and exchange a series of tones that are meant to be decoded by software rather than understood by a human listener.
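To make the idea of data-over-sound concrete, here is a minimal Python sketch of a toy tone-based encoding. It is not ggwave's actual scheme or API: the sample rate, symbol length, frequency plan, and the function names `encode`, `decode`, and `tone_power` are all assumptions chosen for illustration. Each half-byte (nibble) is mapped to one of 16 tones, and the decoder picks the strongest tone in each time slot.

```python
import math

# All constants below are illustrative assumptions, not ggwave's real parameters.
SAMPLE_RATE = 16000    # samples per second
SYMBOL_SAMPLES = 320   # 20 ms of audio per symbol
BASE_FREQ = 1000.0     # tone used for nibble value 0, in Hz
FREQ_STEP = 100.0      # spacing between neighbouring tones, in Hz


def nibble_freq(value: int) -> float:
    """Map a 4-bit value (0..15) to its tone frequency."""
    return BASE_FREQ + FREQ_STEP * value


def encode(payload: bytes) -> list[float]:
    """Turn bytes into audio samples: two tones per byte (high nibble, low nibble)."""
    samples: list[float] = []
    for byte in payload:
        for nibble in (byte >> 4, byte & 0x0F):
            freq = nibble_freq(nibble)
            for n in range(SYMBOL_SAMPLES):
                samples.append(math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples


def tone_power(chunk: list[float], freq: float) -> float:
    """Correlate one symbol-length chunk against a candidate tone."""
    re = im = 0.0
    for n, sample in enumerate(chunk):
        angle = 2 * math.pi * freq * n / SAMPLE_RATE
        re += sample * math.cos(angle)
        im += sample * math.sin(angle)
    return re * re + im * im


def decode(samples: list[float]) -> bytes:
    """Recover bytes by choosing the strongest of the 16 tones in each slot."""
    nibbles = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        best = max(range(16), key=lambda v: tone_power(chunk, nibble_freq(v)))
        nibbles.append(best)
    # Pair nibbles back up into bytes (high nibble first).
    return bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))


if __name__ == "__main__":
    audio = encode(b"hello")
    print(decode(audio))
```

In this toy scheme the sound is only an encoding: anyone who knows the tone plan and runs `decode` recovers the message, while a person listening by ear hears nothing meaningful. A real library would also have to cope with noise, timing, and error correction, which this sketch ignores.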

Gibberlink makes some tasks easier, but it also raises real security concerns. The main ones are listed below:

Lack of Transparency: The most problematic aspect of this protocol is that humans can’t understand what the AIs are saying by listening. Without dedicated tooling, it is hard to monitor their conversations and detect potentially malicious exchanges. That is Gibberlink’s biggest security issue.

Data Privacy Concerns: The next concern also stems from the decoding problem. Because Gibberlink messages are encoded as sound rather than spoken in words, they can be used to conceal unauthorized data transfers. A human listener cannot tell whether sensitive information is being sent, so it may pass without any checks.

Increased Vulnerability to Attacks: Cyber attackers are constantly looking for new attack surfaces, so it is quite possible that they will target Gibberlink, manipulating AI-to-AI communications or extracting important information from them.

Where there are issues, there are also solutions. Here are the main measures that can be taken to address the risks Gibberlink raises:

Implement Robust Monitoring Tools: The first step is to develop monitoring systems that can analyze AI communications, including those carried over Gibberlink. With such tools, it becomes easier to detect malicious or sensitive exchanges between AIs.

Establish Regulatory Frameworks: A proper framework should define where and how Gibberlink may be used, and give governments and industry bodies the right to audit such communications in order to protect national security.

Promote Ethical AI Development: Finally, nations should collaborate and encourage AI developers to take an ethical approach to machine-only communication.
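As an illustration of the monitoring measure above, the following Python sketch shows one possible shape for an audit step. It assumes the machine-only messages have already been decoded into text by some other tool. The names `AuditRecord`, `audit_message`, and `audit_log`, as well as the two patterns, are hypothetical and far from a complete detection policy.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; a real deployment would need a much richer policy.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class AuditRecord:
    sender: str       # which agent sent the message
    text: str         # decoded message text
    flags: list[str]  # names of the patterns that matched


def audit_message(sender: str, text: str) -> AuditRecord:
    """Check one decoded message against every sensitive pattern."""
    flags = [name for name, pattern in SENSITIVE_PATTERNS.items()
             if pattern.search(text)]
    return AuditRecord(sender, text, flags)


def audit_log(messages: list[tuple[str, str]]) -> list[AuditRecord]:
    """Audit a whole conversation given as (sender, decoded_text) pairs."""
    return [audit_message(sender, text) for sender, text in messages]


if __name__ == "__main__":
    conversation = [
        ("agent_a", "Requesting a room for two nights."),
        ("agent_b", "Send the invoice to jane@example.com."),
    ]
    for record in audit_log(conversation):
        status = ", ".join(record.flags) if record.flags else "clean"
        print(f"{record.sender}: {status}")
```

The design choice here is to keep a human-readable record of every machine-only exchange and to flag, rather than silently drop, anything that looks sensitive, so that an auditor can review it later.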

The shift toward Gibberlink represents a technological advance, but one that comes with critical security issues. The more machines adopt protocols like Gibberlink, the harder it will be for humans to oversee their interactions. Monitoring tools should therefore be a priority from the very beginning, so that humans retain control over these exchanges, and an ethical approach should be promoted to keep AI interactions transparent. If this is not taken seriously from the start, it could pose a genuine threat to national security.